Links for 2026-01-14

Human-Like Memory for AI

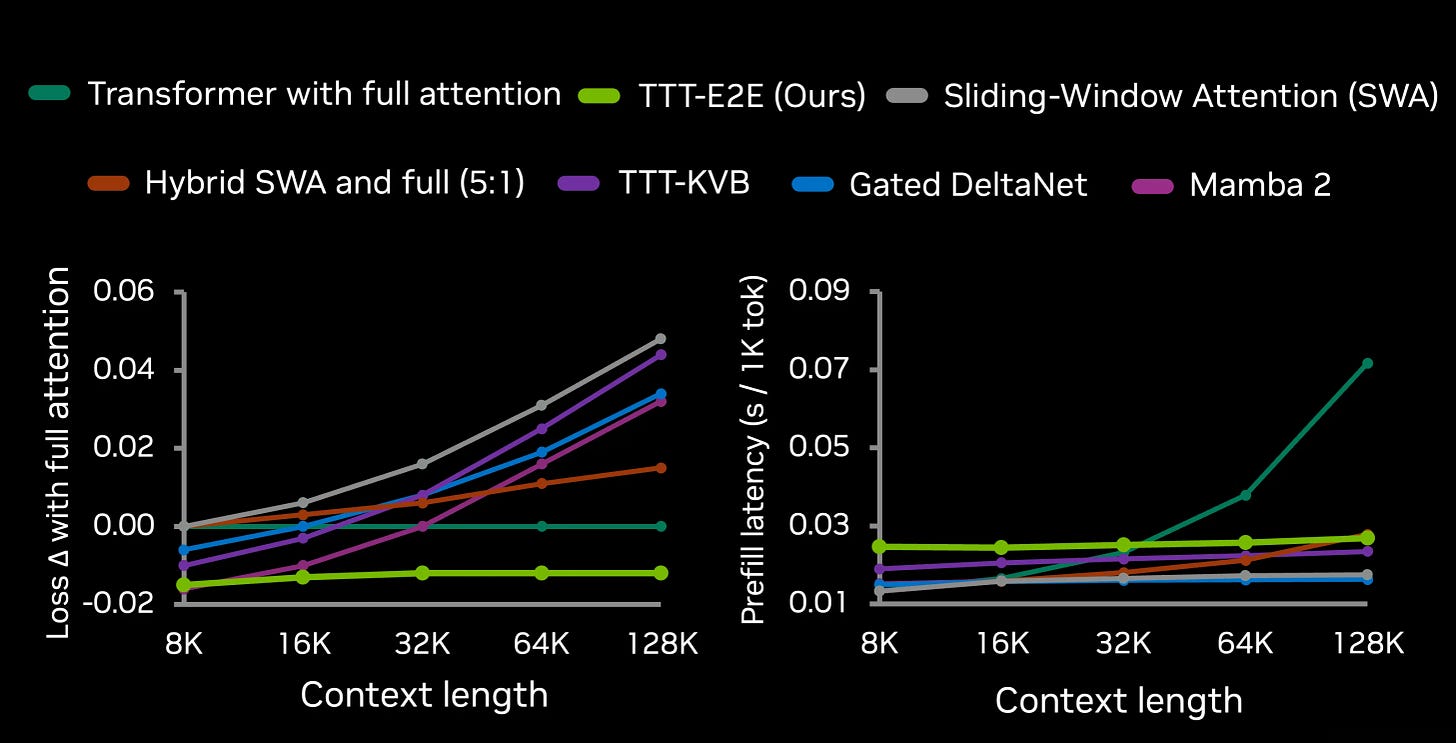

Nvidia researchers developed a new method called TTT-E2E that mimics how humans learn. They use the analogy of a university lecture: years later, you likely won’t remember the professor’s exact words (perfect recall), but you retain the skills and intuition you learned (compressed knowledge).

Instead of only holding your conversation temporarily in a “short-term memory” buffer, the model actually trains on your conversation while it is happening.

Usually, an AI stops learning once it is released to the public. This new model continues to learn and update its internal “brain” (weights) with every new sentence it reads.

By treating the current context (like a long book or code file) as training data, the AI compresses that information into its weights. This allows it to “understand” a massive amount of information without needing to keep a perfect log of every single word.
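The core idea fits in a few lines. Below is a toy sketch of test-time training, my own illustration and not Nvidia's code. The "memory" is a small weight matrix `W` that takes one gradient step per token of context, learning to predict the next token's embedding from the current one. The random embeddings, the linear model, and the learning rate are all assumptions made for illustration; the real method updates the weights of a full language model. Note that `W` stays the same size however long the context is: the context gets compressed, not stored.

```python
import math
import random

# Toy test-time training (TTT) sketch. NOT Nvidia's TTT-E2E implementation:
# the "weights" here are one hypothetical linear map W that learns, while
# reading the context, to predict the next token's embedding from the current one.


def make_embeddings(vocab, dim, seed=0):
    """Hypothetical fixed random unit-length embedding for each token."""
    rng = random.Random(seed)
    emb = {}
    for tok in vocab:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(x * x for x in v))
        emb[tok] = [x / norm for x in v]
    return emb


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def ttt_read(tokens, emb, dim, lr=0.5):
    """Read the context once, taking one SGD step per token on 0.5 * ||W x_t - x_{t+1}||^2.

    The context is used as training data; only W (dim x dim) is kept,
    so memory does not grow with context length.
    """
    W = [[0.0] * dim for _ in range(dim)]
    for cur, nxt in zip(tokens, tokens[1:]):
        x, y = emb[cur], emb[nxt]
        err = [p - t for p, t in zip(matvec(W, x), y)]
        # Gradient of the loss w.r.t. W is the outer product err * x^T.
        for i in range(dim):
            for j in range(dim):
                W[i][j] -= lr * err[i] * x[j]
    return W


def predict_next(W, tok, emb):
    """Decode W @ emb[tok] to the nearest token embedding."""
    pred = matvec(W, emb[tok])
    return min(emb, key=lambda t: sum((p - e) ** 2 for p, e in zip(pred, emb[t])))


if __name__ == "__main__":
    emb = make_embeddings("abcd", dim=16)
    context = list("abcd") * 100  # a long, repetitive "document"
    W = ttt_read(context, emb, dim=16)
    print({tok: predict_next(W, tok, emb) for tok in "abcd"})
```

After one pass over the context, the pattern "a is followed by b" lives only in `W`; no transcript of the 400 tokens is kept.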

Performance: It performs just as well as full-attention Transformers (the standard, slower architecture) at understanding long text, yet it runs at a constant speed per token, no matter how much text you feed it. For very long inputs (e.g., 128,000 tokens), it is roughly 2.7 times faster than full attention; for massive inputs (2 million tokens), it can be 35 times faster.
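Why does the speed gap widen with length? A rough work count, under loudly simplified assumptions: in full attention, each new token compares itself with every token already in the context, so its cost grows with position; a model whose memory is a fixed-size set of weights does the same amount of work per token. `STATE_COST` below is a made-up constant, so the printed ratios are not the reported 2.7x and 35x figures (real latency includes everything else a model computes per token). Only the trend is meaningful.

```python
# Toy work count, NOT a benchmark. Assumptions (all hypothetical):
# - full attention: the token at position t compares against all t tokens so far;
# - fixed-size memory: every token costs the same STATE_COST units.
STATE_COST = 4096  # made-up constant; it changes the ratios, not the trend


def attention_cost_per_token(position):
    return position  # grows linearly with how much text came before


def fixed_memory_cost_per_token(position):
    return STATE_COST  # independent of position: "constant speed"


def work_ratio(n):
    """Total attention work over n tokens divided by total fixed-memory work."""
    attention_total = n * (n + 1) // 2  # closed form of 1 + 2 + ... + n
    fixed_total = n * STATE_COST
    return attention_total / fixed_total


for n in (8_000, 128_000, 2_000_000):
    print(f"{n:>9,} tokens: attention does {work_ratio(n):,.1f}x the work")
```

Because the attention total grows quadratically and the fixed-memory total linearly, their ratio grows linearly with context length, which is the qualitative reason the advantage is far larger at 2 million tokens than at 128,000.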

See also:

Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models https://github.com/deepseek-ai/Engram/blob/main/Engram_paper.pdf

Titans + MIRAS: Helping AI have long-term memory https://research.google/blog/titans-miras-helping-ai-have-long-term-memory/

SimpleMem: Efficient Lifelong Memory for LLM Agents https://arxiv.org/abs/2601.02553

Recursive Language Models https://arxiv.org/abs/2512.24601v1

End-to-End Test-Time Training for Long Context https://test-time-training.github.io/e2e.pdf

AI for math

Lies, Damned Lies, and Proofs: Formal Methods are not Slopless https://www.lesswrong.com/posts/rhAPh3YzhPoBNpgHg/lies-damned-lies-and-proofs-formal-methods-are-not-slopless

From 2.8% to 100%: Automated Proof Verification with Aristotle https://igorrivin.github.io/research/polya-szego-aristotle/ [Aristotle’s waitlist is gone, and now anyone can sign up and immediately get access. https://aristotle.harmonic.fun/]

AxiomProver Solves All Problems at Putnam 2025: Proof Release & Commentary https://axiommath.ai/territory/from-seeing-why-to-checking-everything

Terence Tao on the emergence of AI-powered write–rewrite cycles for mathematical exposition. https://mathstodon.xyz/@tao/115855852706322322

Terence Tao: “I can honestly say I learned something from Aristotle; a minor thing to be sure, but still useful.” https://www.erdosproblems.com/forum/thread/679#post-3050

Gemini helps prove a novel theorem in algebraic geometry

Google announces that a novel theorem in algebraic geometry was proved with substantial help from an internal math-specialized version of Gemini.

According to the authors, the solution does not appear to have been in the training data:

…the model outputs [particularly from FullProof] do not appear to the authors to be that close to those or (to the best of our knowledge) any other pre-existing sources. So, absent some future discovery to the contrary, the model’s contribution appears to involve a genuine combination of synthesis, retrieval, generalization and innovation of these existing techniques.

Professor Ravi Vakil, Stanford University, president of the American Mathematical Society:

We integrated Gemini into various stages of the project to empirically test its effectiveness. In many instances, it proved useful in familiar ways: identifying connections to cross-disciplinary papers, writing data-generation code, and verifying minor lemmas. However, the most striking experience was how it propelled the project forward intellectually.

At a pivotal step in our investigation, we asked Gemini to verify a result we expected to be true. While its outputs generally required substantial human oversight to distinguish valid insights from errors, this specific proof was rigorous, correct, and elegant. The clarity of the exposition revealed a latent structure we hadn’t previously foreseen, suggesting the result held in a much more general setting, which ultimately led us to our final result.

As someone familiar with the literature, I found that Gemini’s argument was no mere repackaging of existing proofs; it was the kind of insight I would have been proud to produce myself. While I might have eventually reached this conclusion on my own, I cannot say so with certainty. My primary takeaway is how meaningful mathematical progress emerged from this genuine synergy between human ingenuity and Gemini’s contributions.

Paper: https://arxiv.org/abs/2601.07222

Source of the quote: Adam Brown from Google DeepMind https://x.com/A_G_I_Joe/status/2011213878395617571

AI

The Molecular Structure of Thought: Mapping the Topology of Long Chain-of-Thought Reasoning https://arxiv.org/abs/2601.06002

MemRL: Self-Evolving Agents via Runtime Reinforcement Learning on Episodic Memory https://arxiv.org/abs/2601.03192

Dr. Zero: Self-Evolving Search Agents without Training Data https://arxiv.org/abs/2601.07055

A method for learning latent action world models from “in-the-wild” videos (real-world footage that is diverse, messy, and uncurated). https://arxiv.org/abs/2601.05230

1X World Model | From Video to Action: A New Way Robots Learn https://www.1x.tech/discover/world-model-self-learning

ShowUI-π: Flow-based Generative Models as GUI Dexterous Hands https://arxiv.org/abs/2512.24965

How does scaling up neural networks change what they learn? On neural scaling and the quanta hypothesis https://ericjmichaud.com/quanta/

An FAQ on Reinforcement Learning Environments https://epochai.substack.com/p/an-faq-on-reinforcement-learning

Cowork: Claude Code for the rest of your work. Cowork lets you handle non-technical tasks the way developers use Claude Code for coding. https://www.lesswrong.com/posts/fm2N4cws8nbdmfGux/claude-coworks

On the Origins of Algorithmic Progress in AI https://mitfuturetech.substack.com/p/on-the-origins-of-algorithmic-progress

The Gentle Singularity; The Fast Takeoff https://www.prinzai.com/p/the-gentle-singularity-the-fast-takeoff

What do experts and superforecasters think about the future of AI research and development? https://forecastingresearch.substack.com/p/what-experts-and-superforecasters

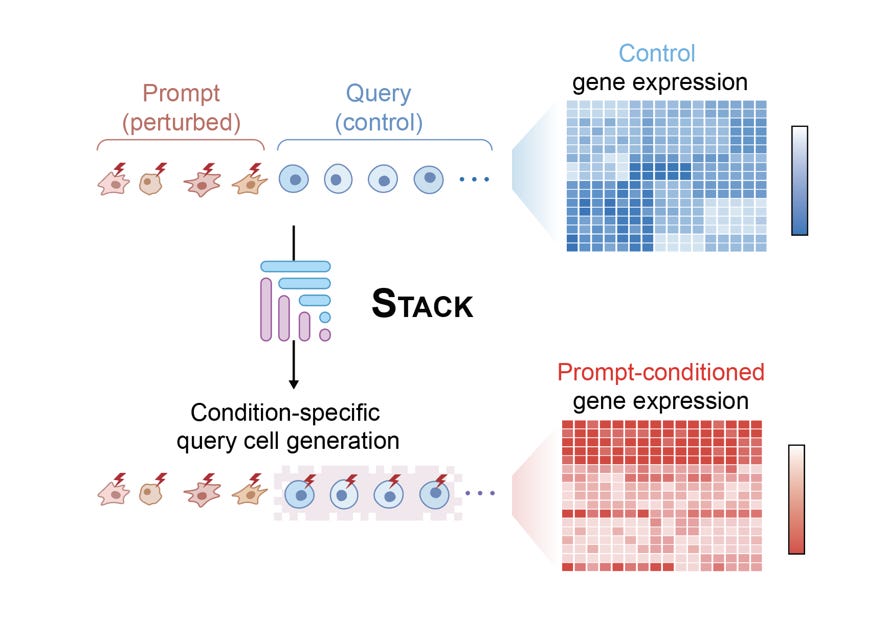

STACK: An open-source artificial intelligence model designed to simulate how human cells behave under different conditions.

Scientists want to know how every type of cell in the human body reacts to every possible drug or disease. However, testing every combination physically in a lab would take years and cost millions of dollars. STACK acts as a “virtual cell model” that can predict these reactions digitally, even for scenarios it has never explicitly seen before.

Unlike previous models that look at cells in isolation, STACK looks at “sets” of cells to understand their environment. Just as a word’s meaning changes based on the rest of the sentence, a cell’s behavior changes based on the cells around it.
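STACK's actual architecture isn't described here, so as a generic illustration of "a cell's representation depends on the other cells in its set", below is plain, unparameterized self-attention over a set of cell vectors. Treating each cell as a small vector (say, a gene-expression profile) is an assumption. This is the same mechanism that lets a word's representation depend on its sentence, minus word positions: since nothing encodes order, shuffling the set just shuffles the outputs.

```python
import math

# Generic self-attention over a SET of cells: a sketch, NOT STACK's architecture.
# Assumption: each cell is a small vector (think gene-expression profile).


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def contextualize(cells):
    """Return one new vector per cell: a similarity-weighted average of the whole set.

    No positional information is used, so permuting the input only permutes the output.
    """
    d = len(cells[0])
    out = []
    for query in cells:
        weights = softmax([dot(query, key) / math.sqrt(d) for key in cells])
        out.append([sum(w * key[i] for w, key in zip(weights, cells)) for i in range(d)])
    return out


if __name__ == "__main__":
    cell = [1.0, 0.0]
    # The same cell, placed in two different neighbourhoods, gets different representations.
    print(contextualize([cell, [0.9, 0.3]])[0])
    print(contextualize([cell, [-1.0, 0.0]])[0])
```

A real model would add learned projections and many layers; the point here is only that the output for `cell` changes when its neighbours change.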

When compared to real laboratory experiments, the model’s predictions were found to capture biologically meaningful and accurate effects.

Read more: https://arcinstitute.org/news/foundation-model-stack

Skynet

The Department of War:

Military AI is going to be a race for the foreseeable future, and therefore speed wins.

The Secretary of War directs the Department of War to accelerate “America’s Military AI Dominance” by transforming into an “AI-first” warfighting force.

---

I am old enough to remember when people worried about AI risks would reject any mention of Skynet because that’s Hollywood and unrealistic. And nobody would build humanoid robots, because that’s stupid. Also, everyone would obviously lock AI in a box without an internet connection; the worry was that it might escape by convincing humans to let it out.

Turns out Hollywood was right.

But surely, we have the common sense not to hand the AI the nuclear launch codes?

Narrator: They did, in fact, hand over the codes immediately.

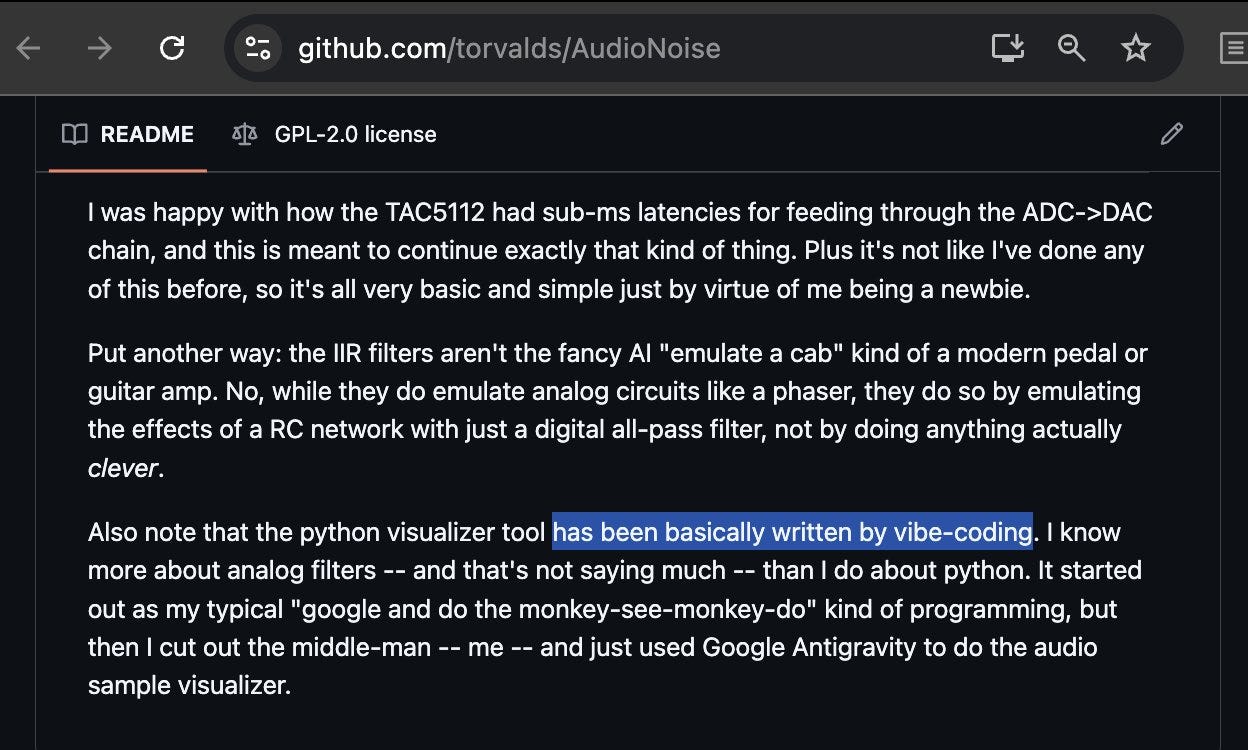

Linus Torvalds is now vibe-coding

For those who don’t know him: he created the Linux kernel in 1991 and has been its lead developer ever since.

Space Computing

Lots of people are now sold on the idea of data centers in space, including Musk, Bezos, and Sundar Pichai.

Miscellaneous

This simple design change could finally fix solid-state batteries https://www.sciencedaily.com/releases/2026/01/260108231331.htm

Pentagon bought device through undercover operation some investigators suspect is linked to Havana Syndrome https://edition.cnn.com/2026/01/13/politics/havana-syndrome-device-pentagon-hsi

UK to develop new deep strike ballistic missile for Ukraine https://www.gov.uk/government/news/uk-to-develop-new-deep-strike-ballistic-missile-for-ukraine

A new study suggests it may be possible to regenerate cartilage lost to aging or arthritis with an oral drug or local injection, rendering knee and hip replacement unnecessary. https://news.stanford.edu/stories/2025/11/joint-cartilage-aging-osteoarthritis-therapy-research

Scientists detect the lowest-mass dark object measured to date: an exotic concentration of dark matter? https://www.mpg.de/25518363/1007-asph-astronomers-image-a-mysterious-dark-object-in-the-distant-universe-155031-x

Being even minimally competent in any intellectual field requires a bedrock of core knowledge memorized, so that you *don’t* have to think about it. For math, that means far more than times tables. https://intellectualtakeout.org/2015/09/why-its-still-important-to-memorize/

These are exciting times for proof formalization (via LLMs + proof assistants, an ideal combination of a bullshit artist and the most persnickety of all critics - now here's a comedy duo that is actually useful) but one shouldn't oversell things. Yes, things may be progressing fast enough that some hype will probably become true soonish, thanks to lots of hard human work, but one should try to be precise.

Right now the aim is for Aristotle to automatize the translation of a blueprint (a human-produced document: basically, a human-generated proof readable by humans, but broken down into tiny lemmas in a hyperpedagogical way - 2 pages can become 6, say) into Lean. (You will still need a human to verify that the Lean *statements* -- in particular the statements of the main theorems -- reflect the corresponding statements in human language.) Aristotle seems to work, in practice, very roughly half of the time. Sometimes it finds ridiculously complicated solutions - itself impressive after a fashion - because it cannot translate the human proof in full, not because it is incorrect, but due to gaps in the math libraries. In particular, there's still a lot of work to be done in analysis - key parts of the advanced undergraduate curriculum are still missing (measure theory), and that is of course a subset of what one needs for research.
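To make the point about checking *statements* concrete, here is a small Lean 4 illustration (my own, not from Aristotle; it assumes plain Lean 4 core with no Mathlib). The first theorem's statement faithfully encodes "the sum of two even numbers is even". The second compiles just as happily but is vacuous: its hypothesis `n < 0` can never hold for a natural number, so it says nothing about the number 42. The proof checker accepts both; only a human reading the statements notices the difference. Both proofs rely on Lean's built-in `omega` tactic for linear arithmetic.

```lean
-- Faithful: "the sum of two even natural numbers is even".
theorem even_add (a b : Nat) (ha : ∃ k, a = 2 * k) (hb : ∃ m, b = 2 * m) :
    ∃ r, a + b = 2 * r :=
  match ha, hb with
  | ⟨k, hk⟩, ⟨m, hm⟩ => ⟨k + m, by omega⟩

-- Vacuous: checks fine, but the hypothesis `n < 0` is unsatisfiable for Nat,
-- so this "theorem" tells us nothing.
theorem everything_negative_is_42 (n : Nat) (h : n < 0) : n = 42 := by
  omega
```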

Linus Torvalds

https://en.wikipedia.org/wiki/Linus_Torvalds

Invented Linux *AND* Git, out of which came GitHub, VS Code, and more.

Has anybody given the world more powerful tools?