Links for 2026-01-06

Stochastic parrots never die

Since some people are still talking about “stochastic parrots” that merely copy from their training data, let me quickly point out that we’ve long since moved beyond bare LLMs.

Modern systems like Claude Code are closed loops “grounded” in a hard reality: compilers, unit tests, linters, benchmarks, mathematical checkers, and other evaluators. Coding agents don’t just “guess” code. They write it, run it, observe failures, and iterate. In other words, they have something older ML systems lacked: tight feedback loops that let them reliably converge on working code rather than merely plausible-sounding code.
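To make the write, run, observe, iterate loop concrete, here is a minimal Python sketch. Everything in it is illustrative: `propose_fix` is a hypothetical stand-in for a model call (it just replays a fixed list of candidate sources instead of reading the feedback), and the `median` task and its tests are made up for the example. What matters is the shape: each candidate is executed against tests, and the failures become the input to the next attempt.

```python
from typing import Optional

# Hypothetical candidate sources, replayed in order by propose_fix().
CANDIDATES = [
    # Attempt 0: forgets to sort.
    "def median(xs):\n    return xs[len(xs) // 2]\n",
    # Attempt 1: sorts, but is wrong for even-length input.
    "def median(xs):\n    s = sorted(xs)\n    return s[len(s) // 2]\n",
    # Attempt 2: handles both odd and even lengths.
    "def median(xs):\n"
    "    s = sorted(xs)\n"
    "    n = len(s)\n"
    "    mid = n // 2\n"
    "    return s[mid] if n % 2 else (s[mid - 1] + s[mid]) / 2\n",
]

# Hypothetical unit tests: (input, expected output).
TESTS = [([3, 1, 2], 2), ([4, 1, 3, 2], 2.5), ([5], 5)]


def run_tests(source: str) -> list[str]:
    """Execute a candidate's source and return a list of failure messages."""
    namespace: dict = {}
    try:
        exec(source, namespace)
    except Exception as e:
        return [f"load error: {e!r}"]
    failures = []
    for args, expected in TESTS:
        try:
            got = namespace["median"](args)
        except Exception as e:
            failures.append(f"median({args}) raised {e!r}")
            continue
        if got != expected:
            failures.append(f"median({args}) = {got}, expected {expected}")
    return failures


def propose_fix(attempt: int, feedback: list[str]) -> str:
    # Hypothetical: a real agent would put `feedback` into the model's prompt.
    return CANDIDATES[min(attempt, len(CANDIDATES) - 1)]


def agent_loop(max_attempts: int = 5) -> Optional[str]:
    """Write, run, observe failures, iterate until the tests pass."""
    feedback: list[str] = []
    for attempt in range(max_attempts):
        source = propose_fix(attempt, feedback)
        feedback = run_tests(source)
        print(f"attempt {attempt}: {len(feedback)} failing test(s)")
        if not feedback:
            return source
    return None
```

Running `agent_loop()` prints one line per attempt (2, then 1, then 0 failing tests) and returns the third candidate. A real agent would feed `feedback` back into its prompt; the loop structure stays the same.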

And once you wrap an LLM in a search-and-evaluate harness (like evolutionary or population-based optimization), you get something even more important: systematic exploration. At that point, the model isn’t “recalling” a solution so much as proposing candidates that are filtered by reality. The LLM supplies strong priors (intuition) that prune the search space, while the harness supplies the reasoning pressure by checking what actually works.

This is why systems in the AlphaEvolve family are a big deal: they can generate candidate programs, measure them, keep improvements, and repeat. Google has stated that AlphaEvolve was used to optimize both a circuit design for its TPUs (AI chips) and a kernel used to train the Gemini models themselves. The AI literally optimized its own “brain” and “nervous system.” The novelty comes from the interaction between a generative prior and an objective evaluator, not from magical memorization. The intelligence emerges from the system, not just from the large language model.
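A toy version of the generate, measure, keep-improvements cycle, sketched in Python under loud assumptions: the “programs” are just the integer coefficients of a quadratic, the evaluator is squared error against a hypothetical target, and `propose` is a random nudge standing in for the LLM. This is plain elitist evolutionary search, not AlphaEvolve’s actual pipeline.

```python
import random

# Hypothetical target the evaluator scores against: y = 3x^2 - 2x + 5.
POINTS = [(x, 3 * x * x - 2 * x + 5) for x in range(-3, 4)]


def evaluate(coeffs: tuple[int, int, int]) -> int:
    """Objective evaluator: sum of squared errors (lower is better)."""
    a, b, c = coeffs
    return sum((a * x * x + b * x + c - y) ** 2 for x, y in POINTS)


def propose(parent: tuple[int, int, int], rng: random.Random) -> tuple[int, int, int]:
    """Stand-in for the LLM prior: nudge one coefficient by a small step."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-2, -1, 1, 2])
    return (child[0], child[1], child[2])


def evolve(generations: int = 300, pop_size: int = 8, seed: int = 0):
    rng = random.Random(seed)
    population = [(0, 0, 0)]
    for _ in range(generations):
        children = [propose(rng.choice(population), rng) for _ in range(pop_size)]
        # Keep improvements: parents and children compete; the best pop_size survive.
        population = sorted(set(population + children), key=evaluate)[:pop_size]
        if evaluate(population[0]) == 0:
            break
    return population[0], evaluate(population[0])


best, err = evolve()
print(best, err)
```

Because parents compete with their children for a slot, the best score never gets worse. A proposer this naive can still stall at a point where no single-coefficient nudge helps, which is the gap a stronger proposer (in AlphaEvolve’s case, an LLM) is meant to fill.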

Furthermore, when a system like AlphaEvolve explores a problem and finds a novel solution, the resulting traces become synthetic data that can be fed back into the training loop to make the base model itself smarter. In agentic synthetic training, the model learns from a curated set of successful trajectories: the system records every “thought” (chain of thought) and every tool call the agent made to get there. Claude Code generates millions of clean, verified coding traces that are almost certainly being used to make future models like “Claude 5” better at coding out of the box.
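What a recorded trajectory might look like, as a minimal Python sketch. The field names, the tool names, and the `verified` flag are assumptions for illustration, not a description of how Anthropic or Google actually store traces; the point is only that each step keeps the reasoning and the tool call together, and that an external checker decides which trajectories are kept as training data.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Step:
    thought: str      # the agent's reasoning before acting
    tool: str         # hypothetical tool name, e.g. "run_tests"
    tool_input: str
    tool_output: str


@dataclass
class Trajectory:
    task: str
    steps: list[Step] = field(default_factory=list)
    verified: bool = False  # set by an external checker (tests, compiler, ...)


def to_training_jsonl(trajectories: list[Trajectory]) -> str:
    """Keep only verified trajectories; emit one JSON object per line."""
    return "\n".join(json.dumps(asdict(t)) for t in trajectories if t.verified)


ok = Trajectory(
    task="fix median() for even-length lists",
    steps=[
        Step("Tests fail on even-length input.", "run_tests", "pytest", "1 failed"),
        Step("Average the two middle values.", "edit_file", "median.py", "saved"),
        Step("Re-run the suite.", "run_tests", "pytest", "3 passed"),
    ],
    verified=True,
)
failed = Trajectory(task="speed up parser", verified=False)

print(to_training_jsonl([ok, failed]))  # one line: only `ok` survives
```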

P.S. Also, don’t forget that compression is intelligence. Compare the size of the training data with the size of the model’s weights and you get huge compression ratios (about 320:1 for Llama 3). To fit all of human knowledge into a 140-gigabyte “container,” the model cannot simply copy and paste. Whatever LLMs are doing, it is overwhelmingly compressed abstraction, not rote storage of everything they’ve seen.
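The 320:1 figure is easy to reproduce as back-of-the-envelope arithmetic. In the Python sketch below, a 70-billion-parameter model stored at 2 bytes per parameter (bf16) gives the 140 GB of weights; roughly 15 trillion training tokens is Meta’s reported pretraining budget for Llama 3; and about 3 bytes of raw text per token is an assumption (the real figure depends on the tokenizer and the data mix).

```python
def compression_ratio(train_tokens: float, bytes_per_token: float,
                      params: float, bytes_per_param: float) -> float:
    """Ratio of raw training-text bytes to model-weight bytes."""
    data_bytes = train_tokens * bytes_per_token
    weight_bytes = params * bytes_per_param
    return data_bytes / weight_bytes


# Assumptions: 70e9 params at 2 bytes (bf16), 15e12 tokens at ~3 bytes/token.
weights_gb = 70e9 * 2 / 1e9
ratio = compression_ratio(15e12, 3.0, 70e9, 2.0)
print(f"weights: {weights_gb:.0f} GB, ratio ≈ {ratio:.0f}:1")
# prints "weights: 140 GB, ratio ≈ 321:1"
```

At 4 bytes per token the same calculation gives roughly 430:1, so the exact ratio should be read loosely; the order of magnitude is the point.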

KernelEvolve

Meta has an agentic kernel engineer called KernelEvolve that reduces kernel development time “from weeks to hours.” The kernels it produces are competitive with expert-written implementations.

KernelEvolve is operating continuously in Meta’s production infrastructure, generating optimized kernels for hundreds of models serving billions of users daily.

This translates to multi-million-dollar reductions in infrastructure operating costs while simultaneously enhancing user engagement metrics that correlate directly with advertising revenue.

KernelEvolve isn’t “an LLM that writes kernels.” It’s a closed-loop system that treats kernel optimization as a search problem: an agentic kernel-coding framework that takes a kernel specification, then generates, tests, profiles, debugs, and iteratively improves candidate implementations across multiple programming layers.
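The generate, test, profile, keep-the-best loop can be sketched in a few lines of Python. This is a toy under stated assumptions: the “kernel” is a sum of squares, the candidates are hand-written stand-ins for model-generated variants (one is deliberately buggy), and `timeit` plays the role of a profiler. KernelEvolve itself targets accelerator kernels and also feeds errors back for debugging, which this sketch leaves out.

```python
import random
import timeit


def reference(xs):
    """Specification: sum of squares, written as plainly as possible."""
    total = 0.0
    for x in xs:
        total += x * x
    return total


# Hypothetical candidates, standing in for LLM-generated kernel variants.
def cand_generator(xs):
    return sum(x * x for x in xs)


def cand_buggy(xs):
    return sum(x * x for x in xs[1:])  # off-by-one: skips the first element


def cand_map(xs):
    return sum(map(lambda x: x * x, xs))


CANDIDATES = {"generator": cand_generator, "buggy": cand_buggy, "map": cand_map}


def is_correct(fn, trials=20, seed=0):
    """Test: compare against the reference on random inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.uniform(-1, 1) for _ in range(rng.randint(1, 50))]
        expected = reference(xs)
        if abs(fn(xs) - expected) > 1e-9 * max(1.0, abs(expected)):
            return False
    return True


def profile(fn, n=10_000, repeats=5):
    """Profile: best-of-N wall time for 10 calls on a fixed input."""
    xs = [float(i % 7) for i in range(n)]
    return min(timeit.repeat(lambda: fn(xs), number=10, repeat=repeats))


def select_best():
    results = {}
    for name, fn in CANDIDATES.items():
        if not is_correct(fn):
            print(f"{name}: rejected (wrong output)")
            continue
        results[name] = profile(fn)
        print(f"{name}: {results[name] * 1e3:.2f} ms")
    best = min(results, key=results.get)
    return best, results
```

`select_best()` rejects the buggy variant before it is ever timed, then returns the fastest correct one; which of the two correct variants wins depends on the machine.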

Read the paper: https://arxiv.org/abs/2512.23236

ALE-Agent outperformed 804 human participants in a 4-hour real-time optimization contest.

Sakana AI’s optimization agent “ALE-Agent” took 1st place in AtCoder Heuristic Contest 058 (AHC058), beating 804 human participants. This is the first known case of an AI agent winning a major real-time optimization programming contest.

AHC problems are time-limited “write-a-solver” contests where the goal is to produce a high-scoring heuristic for a complex optimization problem (often reflecting real industrial planning/logistics-style constraints).
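For readers who haven’t seen one, here is the kind of solver such contests reward, as a minimal Python sketch: simulated annealing with 2-opt moves on a small traveling-salesman instance. Both the technique and the problem are illustrative stand-ins chosen because they are common in heuristic contests; this is not ALE-Agent’s code or the actual AHC058 task.

```python
import math
import random


def tour_length(order: list[int], pts: list[tuple[float, float]]) -> float:
    """Length of the closed tour visiting pts in the given order."""
    return sum(math.dist(pts[order[i - 1]], pts[order[i]]) for i in range(len(order)))


def anneal(pts, iters=10_000, t_start=1.0, t_end=1e-3, seed=0):
    rng = random.Random(seed)
    order = list(range(len(pts)))
    cur = tour_length(order, pts)
    best, best_len = order[:], cur
    for k in range(iters):
        t = t_start * (t_end / t_start) ** (k / iters)  # geometric cooling schedule
        i, j = sorted(rng.sample(range(len(pts)), 2))
        cand = order[:i] + order[i:j + 1][::-1] + order[j + 1:]  # 2-opt: reverse a segment
        cand_len = tour_length(cand, pts)
        delta = cand_len - cur
        # Always accept improvements; accept worse tours with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            order, cur = cand, cand_len
            if cur < best_len:
                best, best_len = order[:], cur
    return best, best_len


rng = random.Random(42)
points = [(rng.random(), rng.random()) for _ in range(30)]
start = tour_length(list(range(30)), points)
tour, length = anneal(points)
print(f"initial {start:.3f} -> annealed {length:.3f}")
```

Accepting some worsening moves while the temperature is high is what lets annealing climb out of local optima; the schedule (`t_start`, `t_end`, `iters`) is the main knob contestants tune.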

The agent extracts insights from its trial-and-error trajectory and reflects them in the next improvement cycle, allowing it to escape local optima that typically trap simpler algorithms.

The total compute and API cost for the 4-hour session was approximately $1,300.

Read more: https://sakana.ai/ahc058/

AI

NVIDIA Announces Alpamayo Family of Open-Source AI Models and Tools to Accelerate Safe, Reasoning-Based Autonomous Vehicle Development https://nvidianews.nvidia.com/news/alpamayo-autonomous-vehicle-development

NVIDIA Releases New Physical AI Models as Global Partners Unveil Next-Generation Robots https://nvidianews.nvidia.com/news/nvidia-releases-new-physical-ai-models-as-global-partners-unveil-next-generation-robots

Boston Dynamics & Google DeepMind Form New AI Partnership to Bring Foundational Intelligence to Humanoid Robots https://bostondynamics.com/blog/boston-dynamics-google-deepmind-form-new-ai-partnership/

In China, A.I. Is Finding Deadly Tumors That Doctors Might Miss — “All of those patients had come to the hospital with complaints like bloating or nausea and had not initially seen a pancreatic specialist, Dr. Zhu said. Several of their CT scans had raised no alarms until they were flagged by the A.I. tool. ‘I think you can 100 percent say A.I. saved their lives,’ he said.” https://www.nytimes.com/2026/01/02/world/asia/china-ai-cancer-pancreatic.html [no paywall: https://archive.is/QvsBv]

AI-generated sensors open new paths for early cancer detection https://news.mit.edu/2026/ai-generated-sensors-open-new-paths-early-cancer-detection-0106

Here’s proof that Claude Code can write an entire empirical polisci paper. https://github.com/andybhall/vbm-replication-extension

Code World Model: Building World Models for Computation https://www.youtube.com/watch?v=sYgE4ppDFOQ

MiniMax M2.1 is open source - SOTA for real-world dev workflows and agentic systems https://www.minimax.io/news/minimax-m21

Claude Code is about so much more than coding https://www.transformernews.ai/p/claude-code-is-about-so-much-more

“Claude Wrote Me a 400-Commit RSS Reader App” https://www.lesswrong.com/posts/vzaZwZgifypbnSiuf/claude-wrote-me-a-400-commit-rss-reader-app

What Drives Success in Physical Planning with Joint-Embedding Predictive World Models? https://arxiv.org/abs/2512.24497

Large language models and the entropy of English https://arxiv.org/abs/2512.24969

Wait, Wait, Wait... Why Do Reasoning Models Loop? https://arxiv.org/abs/2512.12895

Researchers at the University of Waterloo have discovered a method for pretraining LLMs that is both more accurate than current techniques and 50% more efficient. [PDF] https://openreview.net/pdf?id=6geRIdlFWJ

SWE-EVO: Benchmarking Coding Agents in Long-Horizon Software Evolution Scenarios https://arxiv.org/abs/2512.18470

PostTrainBench: Measuring how well AI agents can post-train language models

MoReBench: Evaluating Procedural and Pluralistic Moral Reasoning in Language Models, More than Outcomes https://morebench.github.io/

Exploring a future where AI handles the execution, shifting our primary role from technical "doers" to high-level "directors" and critics. https://fidjisimo.substack.com/p/closing-the-capability-gap

Oversight Assistants: Turning Compute into Understanding https://www.lesswrong.com/posts/oZuJvSNuYk6busjqf/oversight-assistants-turning-compute-into-understanding

In Ukraine, an Arsenal of Killer A.I. Drones Is Being Born in War Against Russia https://www.nytimes.com/2025/12/31/magazine/ukraine-ai-drones-war-russia.html [no paywall: https://archive.is/EzScy]

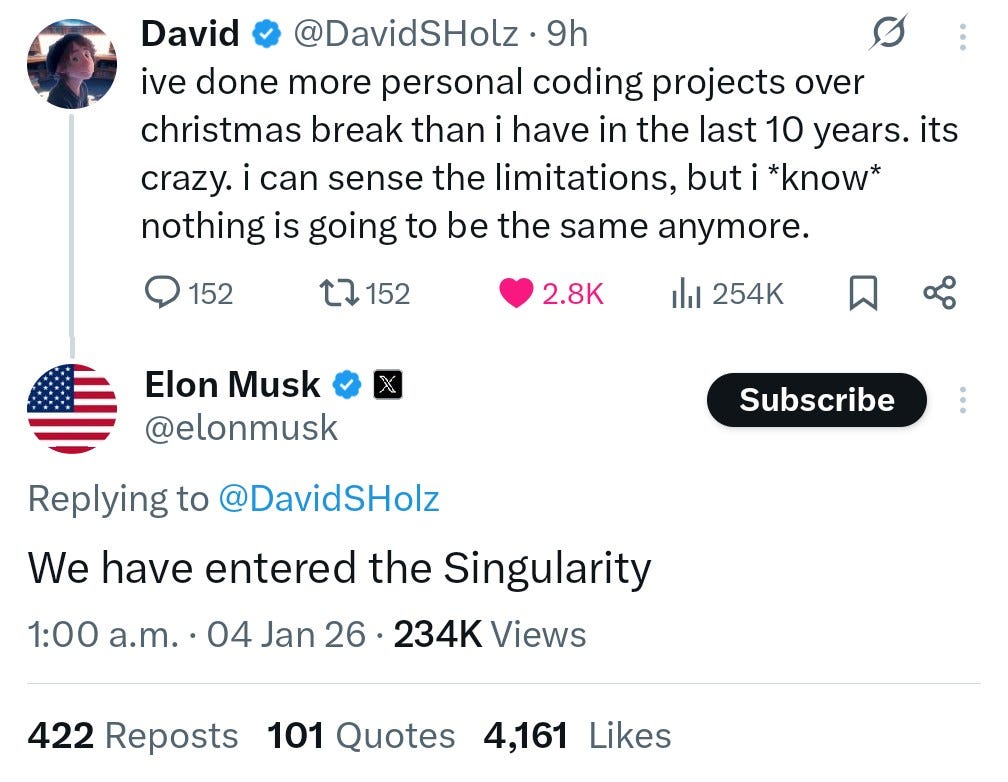

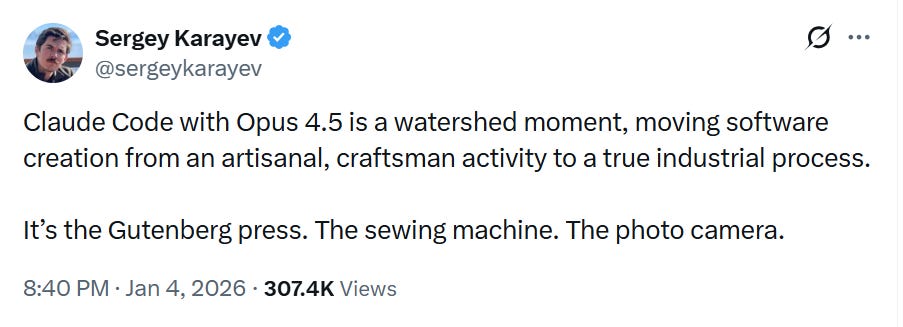

Many people have been making similar statements recently. Of course, we haven’t entered the Singularity yet. But things are certainly accelerating. The more useful this technology becomes, the more money and people will flow into improving it, creating a positive feedback loop. Things will turn truly Singularity-ish once these tools start to meaningfully improve themselves without human intervention. This could happen as early as 2028.

Neuroscience

When will we be able to decode a non-trivial memory based on structural images from a preserved brain? https://neurobiology.substack.com/p/when-will-we-be-able-to-decode-a

Your Brain Doesn’t Command Your Body. It Predicts It. https://www.youtube.com/watch?v=RvYSsi6rd4g&t=2s

Miscellaneous

Information Without Rents: Mechanism Design Without Expected Utility — The economic idea that “private information equals profit” depends entirely on the assumption that people calculate risk using standard Expected Utility. Once you remove that assumption, a clever Designer can use dynamic steps to strip away all the privacy benefits of sophisticated agents, unless those agents are specifically “Concave” (super cautious) in their preferences. https://cowles.yale.edu/research/cfdp-2481-information-without-rents-mechanism-design-without-expected-utility

Enlightenment Ideals and Belief in Progress in the Run-up to the Industrial Revolution: A Textual Analysis https://academic.oup.com/qje/advance-article/doi/10.1093/qje/qjaf054/8361686

Is the European Union now the world’s most underrated libertarian project? https://cpsi.media/p/the-case-for-a-eu-progress-studies
