Links for 2025-12-31

End-to-End Test-Time Training for Long Context

Long-context language modeling as continual learning.

Instead of a model that stays fixed after training, the authors use a mostly standard Transformer (with sliding-window attention) that keeps learning while it reads. As it processes a long document, it briefly trains on the text it has just seen (via next-token prediction) and stores what matters in its weights, effectively “compressing” the context.

Crucially, they train the model to be good at this on-the-fly learning using meta-learning, so a few small update steps at inference reliably improve performance.

The payoff is a strong speed/scale tradeoff: like RNNs, it has constant inference latency as context grows, yet it scales in quality with context similarly to full attention. It is also reported to be 2.7x faster than full attention at 128K tokens (on an H100). A caveat: because it compresses, it’s worse at exact “needle-in-a-haystack” recall than full attention.
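To make the "keeps learning while it reads" idea concrete, here is a minimal toy sketch in pure Python. It is not the paper's method: it assumes a tiny bigram softmax model in place of the Transformer, has no sliding-window attention, and leaves out the meta-learning outer loop entirely. All names (`read_document`, `chunk_loss_and_grad`, `lr`, `chunk_size`) are hypothetical. It only illustrates the inner loop: score each chunk first, then take one gradient step of next-token prediction on it so that later chunks benefit.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def chunk_loss_and_grad(weights, chunk):
    """Mean next-token cross-entropy on a chunk, plus its gradient
    with respect to the bigram logit table (toy stand-in for a model)."""
    vocab = len(weights)
    grad = [[0.0] * vocab for _ in range(vocab)]
    pairs = list(zip(chunk[:-1], chunk[1:]))
    loss = 0.0
    for prev, nxt in pairs:
        probs = softmax(weights[prev])
        loss -= math.log(probs[nxt])
        for j in range(vocab):
            grad[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
    n = len(pairs)
    return loss / n, [[g / n for g in row] for row in grad]

def read_document(tokens, vocab, chunk_size=8, lr=0.0):
    """Read tokens chunk by chunk. Each chunk is scored BEFORE the model
    learns from it; with lr > 0 the weights then take one gradient step."""
    weights = [[0.0] * vocab for _ in range(vocab)]  # starts as a uniform predictor
    losses = []
    for start in range(0, len(tokens) - 1, chunk_size):
        # Overlap by one token so every adjacent pair is covered exactly once.
        chunk = tokens[start:start + chunk_size + 1]
        loss, grad = chunk_loss_and_grad(weights, chunk)
        losses.append(loss)
        if lr > 0.0:
            for i in range(vocab):
                for j in range(vocab):
                    weights[i][j] -= lr * grad[i][j]
    return sum(losses) / len(losses)

# A repetitive toy "document": the pattern 0, 1, 2, 3 repeated.
doc = [0, 1, 2, 3] * 16
frozen = read_document(doc, vocab=4, lr=0.0)    # never updates its weights
adaptive = read_document(doc, vocab=4, lr=1.0)  # learns while reading
print(f"frozen: {frozen:.3f}  adaptive: {adaptive:.3f}")
```

On this toy document the frozen model stays at the uniform-guess loss, while the adaptive one gets better as it reads. Memory for the learned state is fixed (the weight table), which is the sense in which context is "compressed" into weights rather than kept as an ever-growing cache.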

Paper: https://test-time-training.github.io/e2e.pdf

AI

Agent-R1: Training Powerful LLM Agents with End-to-End Reinforcement Learning https://arxiv.org/abs/2511.14460

DEMOCRITUS: A new system designed to build Large Causal Models (LCMs) by extracting and organizing the vast causal knowledge hidden within today’s Large Language Models (LLMs). DEMOCRITUS does not identify true causal effects but creates a browsable hypothesis space. It is essentially a Google Maps for mechanisms: a way to quickly surface candidate confounders, alternative pathways, and related literatures across fields. https://www.arxiv.org/abs/2512.07796

Designing RL curricula for robots is tedious and brittle. But what if LLMs could design the entire curriculum from a natural language prompt? AURA: Autonomous Upskilling with Retrieval-Augmented Agents https://arxiv.org/abs/2506.02507

Universally Converging Representations of Matter Across Scientific Foundation Models https://arxiv.org/abs/2512.03750

An AI model can predict gene expression and interactions between transcription factors that regulate key genes https://www.nature.com/articles/d41586-024-04107-5 (paper: https://www.nature.com/articles/s41586-024-08391-z)

Have You Tried Thinking About It As Crystals? https://www.lesswrong.com/posts/AeDCrYhmDqRkm6hDh/have-you-tried-thinking-about-it-as-crystals

Drawing inspiration from biological memory systems, specifically the well-documented “spacing effect,” researchers have demonstrated that introducing spaced intervals between training sessions significantly improves generalization in artificial systems. https://www.biorxiv.org/content/10.64898/2025.12.18.695340v1.full

Universal Reasoning Model https://arxiv.org/abs/2512.14693

The Bayesian Geometry of Transformer Attention https://arxiv.org/abs/2512.22471

Let the Barbarians In: How AI Can Accelerate Systems Performance Research https://arxiv.org/abs/2512.14806

Terry Tao on the future of mathematics | Math, Inc. https://www.youtube.com/watch?v=4ykbHwZQ8iU

AI contributions to Erdős problems https://github.com/teorth/erdosproblems/wiki/AI-contributions-to-Erd%C5%91s-problems

How far can decentralized training over the internet scale? https://epochai.substack.com/p/how-far-can-decentralized-training

SoftBank has fully funded $40 billion investment in OpenAI, sources tell CNBC https://www.cnbc.com/2025/12/30/softbank-openai-investment.html

2025 AI review by Zhengdong Wang https://zhengdongwang.com/2025/12/30/2025-letter.html

AI Futures Model: A quantitative model predicting AI capabilities. https://blog.ai-futures.org/p/ai-futures-model-dec-2025-update

Machine translation increased international trade by 10%, literally having the same effect as shrinking the size of the world by 25%. [2018] [PDF] https://www.nber.org/system/files/working_papers/w24917/w24917.pdf

Adam Marblestone – AI is missing something fundamental about the brain https://www.dwarkesh.com/p/adam-marblestone

AI hardware

AI memory demand propels Kioxia to world’s best-performing stock https://www.msn.com/en-us/money/markets/ai-memory-demand-propels-kioxia-to-world-s-best-performing-stock/ar-AA1TfV7u

Memory loss: As AI gobbles up chips, prices for devices may rise https://www.npr.org/2025/12/28/nx-s1-5656190/ai-chips-memory-prices-ram

AI Data Centers Demand More Than Copper Can Deliver https://spectrum.ieee.org/rf-over-fiber

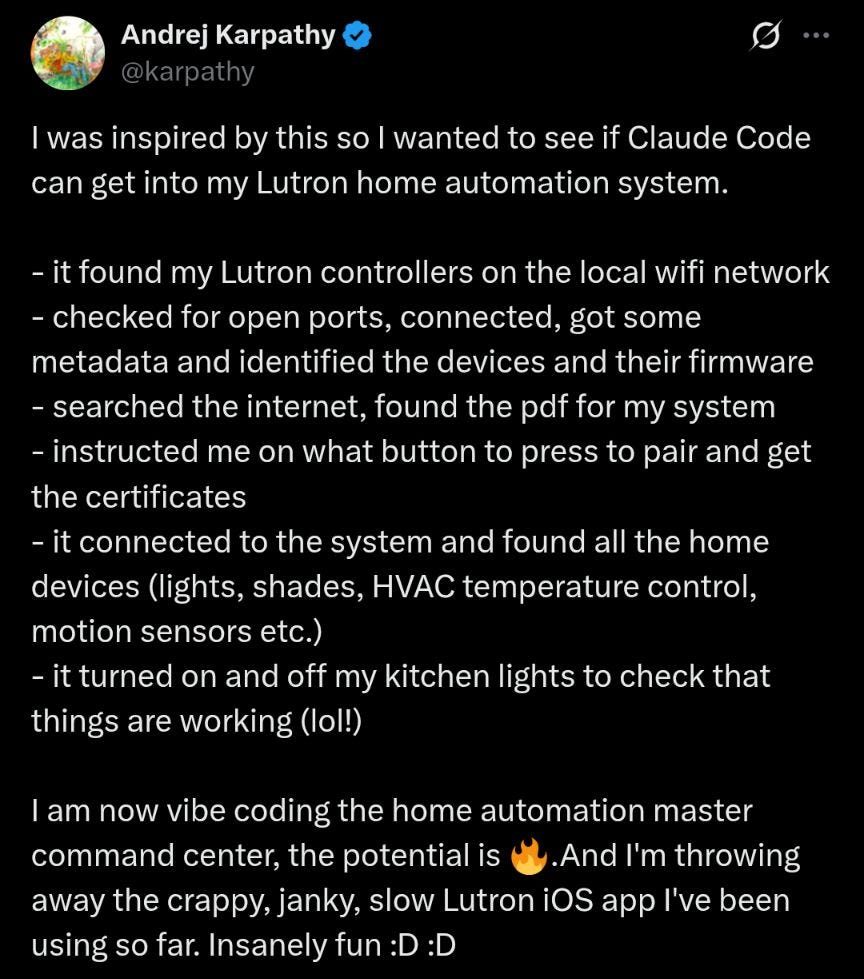

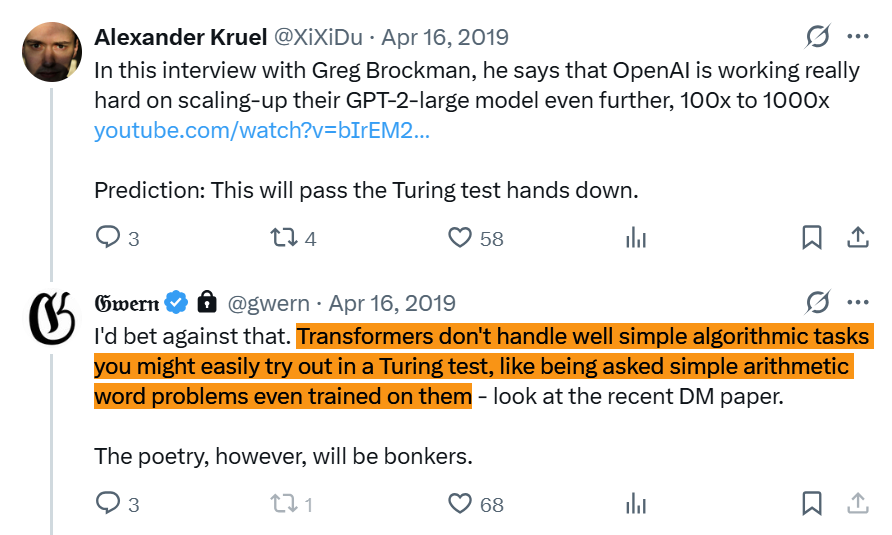

2019 vs. Today

We’ve come a long way.

Back then, the “gotcha” was: ask it a simple arithmetic word problem and it collapses. Today, Fields Medalists are using these models to turn research math into machine-checkable proofs. Models are capable of long-horizon reasoning and multimodal world understanding, and they natively use tools for deep web search and code execution. We went from GPT-2 spitting out “code-shaped text” to agents that ship real, multi-file software.

Fasten your seatbelts. The progress between now and 2032 will be greater than the progress between 2019 and today.

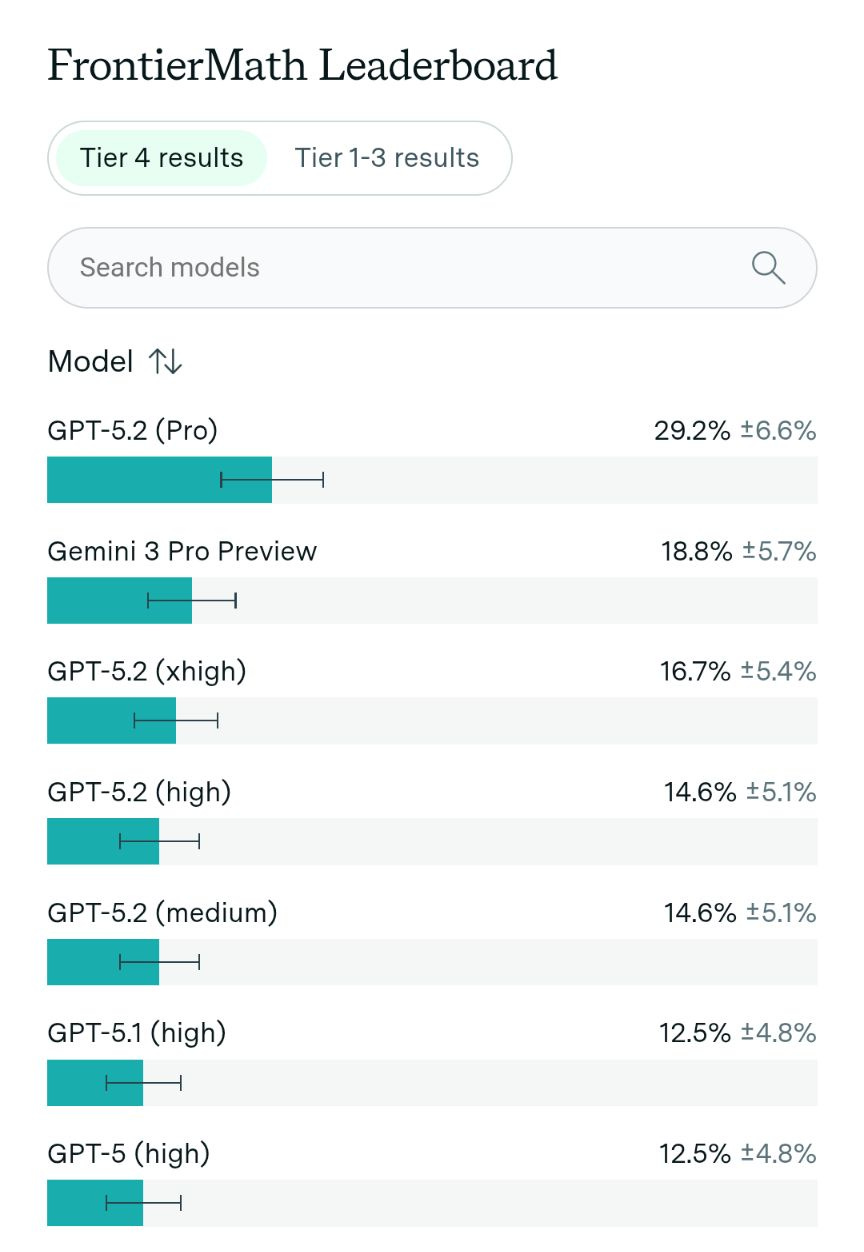

FrontierMath Tier 4

Six months ago, the models were at 4% on FrontierMath Tier 4.

FrontierMath Tier 4 is the most advanced level of the FrontierMath benchmark developed by Epoch AI, consisting of 50 exceptionally difficult, research-level mathematical problems. These unpublished challenges are designed to be so complex that they typically require weeks or months of effort from PhD-level mathematicians to solve, serving as a rigorous test for the reasoning limits of frontier AI models.

Read more: https://epoch.ai/frontiermath

Humans are worse

Almost everything that people criticize LLMs for is worse in humans. The crucial difference is trajectory: while human biology is locked in a glacial evolutionary cycle, AI is improving at a rate that renders today’s limitations temporary bugs rather than permanent features.

Hallucinations? Do you know how unreliable human witnesses are? According to the American Psychological Association, approximately one in three eyewitnesses misidentifies someone in a lineup.

Diminishing returns of ‘test-time compute’? Human cognition hits a ceiling almost immediately. If you give the average person ten hours instead of ten minutes to solve a complex math problem, they don’t become ten times smarter. Instead, they get tired, frustrated, and start looping on the same errors. For humans, more time often leads to overthinking, not a breakthrough.

Changing the wording of a question changes the answer? In humans, subtle changes, such as replacing a single word or changing the order of options, can swing results by 10 to 50 percentage points, effectively reversing the conclusion of a study. Furthermore, partisan cueing is so strong that political party matters more than the actual policy. When people are told that a policy comes from their own party, they tend to support it; if told it comes from the opposing party, they tend to reject it, even if the policy is identical in both cases.

Trouble counting the number of “r”s in the word “strawberrry”? Well, you would also have trouble with this if you saw tokens instead of letters. Humans fall for perceptual and cognitive illusions constantly.

Parameters vs. Synapses?

Something that should be food for thought is that our biggest AI models have parameter counts on the same order of magnitude as the number of synapses in a rat brain, yet they can outperform rats on many cognitive tasks.

Making this even more remarkable is that biological synapses are far more complex than a single weight (chemical signaling, precise timing, and plasticity/local “memory”). And biological brains run on dense feedback loops and recurrent dynamics, whereas standard LLM inference is mostly a feed-forward pass repeated token-by-token rather than continuous real-time recurrence.

Additionally, rats come with a huge evolutionary prior (fine-tuned over hundreds of millions of training years) and embodiment, letting them learn via direct multimodal interaction with the world.

You might argue that the collective written knowledge in LLM training data compensates for some of that, and that digital signals are vastly faster than biological ones.

Still, we’re looking at systems with “rodent-scale” parameter counts that can do things like write code and win a Math Olympiad gold medal. What will systems be capable of as we move toward human-brain-scale connectivity? And what happens once we add persistent memory, continual learning, and robotic bodies?

Science & Technology

Medical breakthroughs in 2025 https://www.scientificdiscovery.dev/p/medical-breakthroughs-in-2025

Dark energy just got even weirder and why the Universe may end in a ‘Big Crunch’ https://www.bbc.com/news/articles/c17xe5kl78vo

This report claims that in some places, under certain circumstances, batteries are now affordable enough to provide solar power when needed. In 2024 alone, average battery prices fell by 40%, and signs indicate that a similar decline is occurring in 2025. Pairing solar with enough batteries to keep the electricity flowing through the night is no longer a distant dream. https://ember-energy.org/latest-updates/batteries-now-cheap-enough-to-deliver-solar-when-it-is-needed/

Large-scale mapping of artificial perceptions for neuroprostheses using spontaneous neuronal activity in macaque and human visual cortex https://www.brainstimjrnl.com/article/S1935-861X(25)00421-8/fulltext

Clinical trials are engines for scientific discovery. Better drugs require not just more trials, but also improved data collection, to create therapeutic feedback loops. https://www.asimov.press/p/clinic-loop

How these strange cells may explain the origin of complex life https://www.sciencenews.org/article/cells-origin-of-life-asgard-archaea

A Timelapse of Satellite Launches: 1957–2025 https://www.youtube.com/watch?v=qJ7O2gigebQ

Exploring Mathematics with Python https://coe.psu.ac.th/ad/explore/

Ukraine

Strikes

I have now logged 289 Ukrainian middle- and long-range strikes and operations since 21 October 2025.

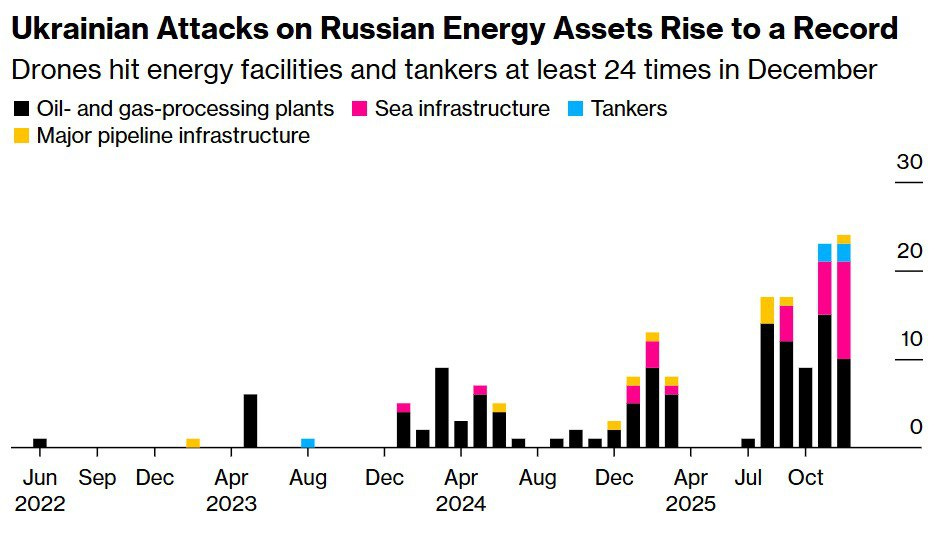

According to Bloomberg, Ukraine has carried out at least 24 (now 26) attacks on refineries, tankers, and maritime and pipeline infrastructure in December, marking the highest monthly frequency since the start of the war. This is pretty much in agreement with my own list.

Economic Impact: The Russian government expects oil and gas revenue to sink to 23% of budgetary income this year, a record low.

My sheet: https://docs.google.com/spreadsheets/d/1kH4qcGw3fREX3jhGO8c9fNmD9ocF_vikutPNsOo33ls/

Bloomberg report: https://www.bloomberg.com/news/articles/2025-12-30/ukrainian-strikes-on-russia-s-energy-assets-hit-a-monthly-record

Russian KIA

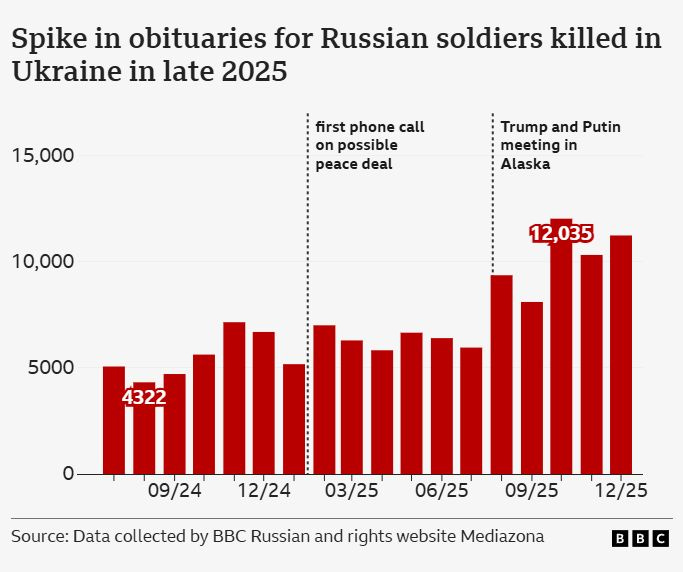

Over the past 10 months, Russian losses in the war with Ukraine have been growing faster than at any time since the start of the full-scale invasion in 2022, BBC analysis suggests.

As peace efforts intensified in 2025 under pressure from US President Donald Trump’s administration, 40% more obituaries of soldiers were published in Russian sources compared with the previous year.

Source: https://www.bbc.com/news/articles/c62n922dnw7o

It is estimated that this method captures roughly 45% to 65% of the true Russian death toll. Casualty numbers (KIA+WIA) are much higher.
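As a rough sketch of what that coverage range implies: if obituary counting captures a fraction c of the true toll, a confirmed count N corresponds to a true toll of about N / c. The function name and the input of 100,000 below are placeholders for illustration only, not figures from the BBC analysis.

```python
def implied_toll_range(confirmed, coverage_low=0.45, coverage_high=0.65):
    """Scale a confirmed count by the estimated coverage range (45% to 65%).
    Higher coverage gives the lower bound, lower coverage the upper bound."""
    return confirmed / coverage_high, confirmed / coverage_low

# Placeholder input for illustration only; not a number from the source.
low, high = implied_toll_range(100_000)
print(f"implied true toll: roughly {low:,.0f} to {high:,.0f}")
```

In other words, the implied true toll is roughly 1.5x to 2.2x whatever count the obituary method confirms.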

See also: At least 7495 Russian officers have been eliminated in the Russian invasion of Ukraine since 24 February 2022.

Sources (public Russian obituaries and graves): https://docs.google.com/spreadsheets/u/0/d/1InyFVmu1LoSjqcWTHe4iD9cR8CNiL-5Ke5Jiz_Mlvwc/htmlview

In Baxter’s books they all had smartpads with an AI called Thales and Democritus :)

Why didn’t you include Gwern’s very recent response to his tweet you mentioned?:

https://x.com/gwern/status/2005722347739963681